
The EU AI Act is a major step toward regulating artificial intelligence with real enforcement. Its goal is to protect users, ensure safety, and increase transparency. But as it focuses on large providers, open-source developers are wondering how they fit in. Hugging Face, a central platform for open machine learning (ML), has raised concerns.

The issue is that open ML is not like corporate AI. It’s distributed, transparent, and built by communities. Treating it the same way risks discouraging collaboration and experimentation. Regulations must recognize how open ML operates if they want to support innovation without closing it off.

Open ML isn't just open code. It's a way of building AI where models, training scripts, datasets, and benchmarks are shared publicly. Platforms like Hugging Face and GitHub support this open exchange. It speeds up progress and allows smaller teams or individuals to contribute meaningfully. Open ML lowers the barrier to experimentation and drives reuse, especially in places with limited resources.

The EU AI Act introduces risk-based categories, requiring compliance for systems in higher-risk domains. But many of these rules could apply to people who are simply sharing models. A developer fine-tuning a text model for research might suddenly be responsible for documentation, registration, or even unintended downstream misuse.

This is where things don’t align. Open ML rarely has rigid release gates, formal sign-off processes, or centralized monitoring. Models often evolve through forks and community input. Holding an individual accountable for every future use of a shared model discourages openness. It may even drive development into private channels to avoid scrutiny.

Most open contributors already take responsibility. Many models on Hugging Face include model cards, explain data sources, and set clear terms. But applying commercial-level compliance rules to them isn’t practical. It risks turning a collaborative field into a restricted space.

Hugging Face has actively joined policy talks around the EU AI Act. They’ve pointed out how the law risks lumping researchers in with commercial deployers. A lab releasing a general-purpose model might be treated like a firm deploying an AI medical device. The intent to regulate high-risk use is fair, but the scope needs precision.

One of Hugging Face’s key messages is that not all AI models are applications. Sharing a general-purpose model is not the same as building a decision-making system. If the law treats every model as a product, open science suffers.

They argue for tiered responsibility. The person uploading a model should not carry the same legal burden as someone deploying it in a hospital or courtroom. Developers can offer transparency, but they can’t predict or control every use. This needs to be built into the legal language so contributors aren’t punished for things beyond their reach.

The platform has also worked on tools to support better documentation. Hugging Face encourages model cards, training summaries, and ethical notes. But they resist turning this into a regulatory checklist that discourages sharing. Documentation should be easy, useful, and flexible—suited for open collaboration, not corporate audits.

Open ML doesn’t mean unregulated. The community often flags issues, revises datasets, and retracts harmful models. But it works through shared norms, not top-down enforcement. The EU AI Act, in contrast, is built on a centralized model. It expects clear providers, defined responsibilities, and audit trails.

That works for companies but not for ecosystems. Open models get reused, remixed, and fine-tuned in unpredictable ways. If the original author is held liable for every version, sharing becomes risky. That's a loss not just for developers but for the broader AI community.

A better structure would be layered responsibility. Share a model? You document what you can. Fine-tune it? You document your changes. Deploy it in a sensitive domain? Then you follow the risk-based requirements. This mirrors how software is treated: Linux is open, but when it's used in critical systems, those systems need approval.

Hugging Face supports this modular view. It fits how open ML works and ensures accountability without burdening people unfairly. They’re building tools to help contributors document their models and add governance features without blocking experimentation.

The goal isn’t to avoid regulation. It’s to shape regulation that respects how open ML actually functions. That way, contributors can share safely, and the public stays protected where it matters most.

Some AI uses do need strict oversight—like policing, healthcare, or public scoring systems. But the Act should avoid treating all model releases the same. If it targets every model sharer with compliance duties, it may end up silencing the very people driving progress.

Hugging Face’s policy work reflects this balance. They want safety and openness to coexist. But they don’t want the burden of regulation to land unfairly on small teams or individuals. There’s a big difference between open publishing and commercial deployment. The law must reflect that.

Clearer boundaries between research and use, more support for voluntary documentation, and sensible limits on liability can go a long way. Without these, the open ML world might close itself off—not for lack of ideas, but out of fear.

The EU AI Act is the first of its kind. That makes it important to get it right. It should lead by understanding—not just enforcing. Open ML doesn't resist responsibility; it just needs responsibility to be shared based on roles and risks, not assumptions.

The future of AI depends on both regulation and open collaboration. The EU AI Act is trying to protect users from risky systems, but it must do so without shutting down experimentation. Hugging Face’s call is simple: treat open ML as what it is, a collaborative, distributed, and transparent ecosystem. Open contributors can’t be expected to carry the same obligations as deployers of high-risk systems. If the law can make that distinction clear, it helps everyone. We get better AI and better safety. The open ML ecosystem continues to thrive without being pushed into the shadows. And regulation serves its true purpose: responsibility without overreach.

Advertisement

Why is Tesla’s Full Self-Driving under federal scrutiny? From erratic braking to missed stops, NHTSA is investigating safety risks in Tesla’s robotaxis. Here’s what the probe is really about—and why it matters

Discover seven powerful ways AI helps manage uncertainty and improve resilience in today's fast-changing business world.

Explore the underlying engineering of contextual ASR and how it enables Alexa to understand speech in context, making voice interactions feel more natural and intuitive

Microsoft and Nvidia’s AI supercomputer partnership combines Azure and GPUs to speed model training, scale AI, and innovation

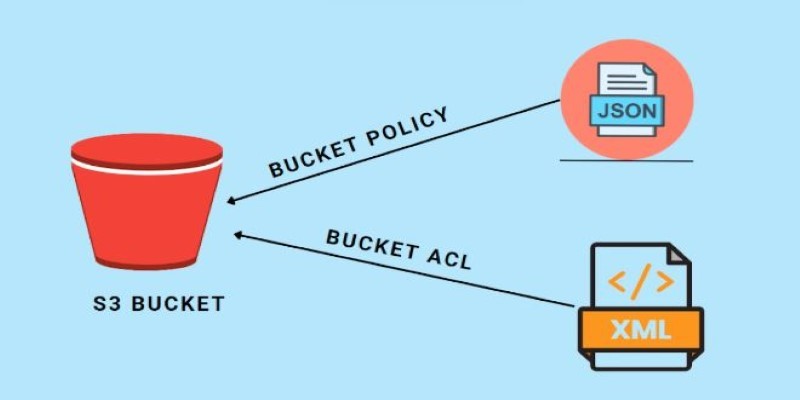

How AWS S3 buckets and security work to keep your cloud data protected. This guide covers storage, permissions, encryption, and monitoring in simple terms

The UK’s AI Opportunities Action Plan outlines new funding, training, and regulatory measures to boost artificial intelligence innovation. Industry responses highlight optimism and concerns about its impact

How NVIDIA’s Neuralangelo is redefining 3D video reconstruction by converting ordinary 2D videos into detailed, interactive 3D models using advanced AI

Apple may seem late to the AI party, but its approach reveals a long-term strategy. Rather than rushing into flashy experiments, Apple focuses on privacy, on-device intelligence, and practical AI integration that fits seamlessly into everyday life

Discover how 9 big tech firms are boldly shaping generative AI trends, innovative tools, and the latest industry news.

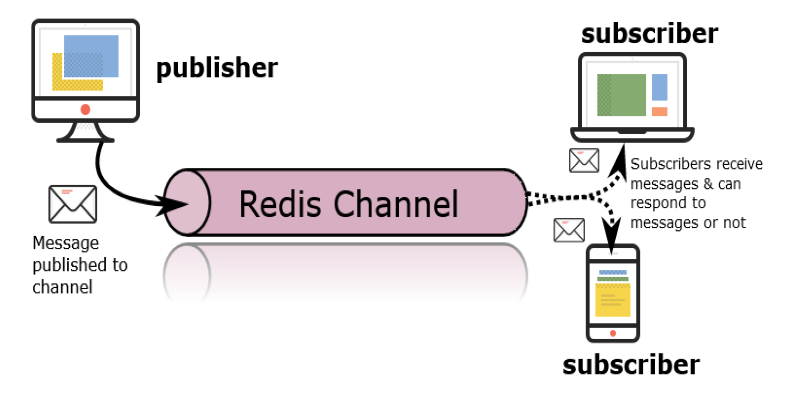

Need instant updates across your app? Learn how Redis Pub/Sub enables real-time messaging with zero setup, no queues, and blazing-fast delivery

Why AI training is increasingly being shaped by tech giants instead of traditional schools. Explore how hands-on learning, tools, and real-time updates from major tech companies are changing the way people build careers in AI

OpenAI robotics is no longer speculation. From new hires to industry partnerships, OpenAI is preparing to bring its AI into the physical world. Here's what that could mean