Advertisement

Training a machine learning model is half the story. A Jupyter notebook of high accuracy looks great on screen. Still, if the model remains stuck on the screen, it adds no business value. Deployment is the process by which the ideas are made available for use. For the modern data scientist, analysis can't be the be-all and end-all of the skill set.

Teams need talent that can work across data prep to production. This shifting has made Deployment a milestone on the way to becoming a full-stack solution. In this article, we'll discuss how new-age data scientists are bridging the divide between research and real-world applications. You'll see how models evolve from training to API, and then to Docker containers, which promote reliability and scalability.

In the past, the division of labor was distinct. Engineers deployed the models, but scientists trained them. The boundaries were sharp and handovers were a routine. That world is disappearing. Data scientists are increasingly expected to be an active part of the process of delivering production-ready solutions. It's not just about cleaning up data and fine-tuning models; it's about ensuring that the predictions reach end users seamlessly.

It is not a burden - it's an opportunity. You also get greater visibility into how your work is performing out on the ground when you are more involved in the lifecycle. You can watch the model's impact on decision-making, products, or customer experiences. That's the impact, and it's worth making the full-stack journey.

The term "full-stack" often strikes fear in people. They imagine it as the mastery of every technology. Full-stack data scientists don't do everything, but they are skilled enough to make practical contributions. This mindset means curiosity extends beyond the notebook. How will the model get new data? Who communicates with the output? So, what does it take to keep it running reliably? Finding the answer to these questions is the essence of the role.

Equally as important is collaboration. You might not be the architect of a server, but you should know how your API will be integrated. You may not design the product, but you can explain how predictions support the features. Being full-stack is not so much about mastering tools as it is about connecting the dots throughout the lifecycle.

As mentioned earlier, Deployment is necessary to determine the model's output and its benefits to businesses in the real world. The following steps outline the process for deploying the model.

The deployment process begins with clean and structured data. Preprocessing, including missing value imputation, categorical encoding, and feature scaling, prepares the data for a model that can be applied outside the lab. Skipping these steps leads to brittle systems later. Consider a simple classification problem, such as identifying the species of flowers from the Iris dataset. A logistic regression model is sufficient to demonstrate the process. Once trained, the critical step is saving the model for reuse. It is done using joblib or pickle, which serializes the model into a portable file. This file is your artifact. Instead of having to retrain every time, you now have a version ready for production. It's where you make your work look like software, rather than an experiment. Without this step, the remainder of the deployment journey doesn't have a foundation.

A serialized model is static; it cannot serve predictions independently of the original model. That's where APIs can help. An API provides other applications with a specified way to send data and receive predictions. FastAPI is one of the best frameworks for this purpose. Lightweight and fast, it enables you to define endpoints in just a few lines of code. A POST endpoint, for instance, takes new information, generates output by using the model, and returns the output. A GET endpoint can be used to check whether the service is healthy or to provide metadata. Some of the functional endpoints could be:

It's the layer of API that converts your saved file into an interactive service that's available to the world at large.

Even if there is a working API, it still presents a problem to deploy because environments may differ. A server might have different versions of Python or dependencies that are not compatible with your laptop. It is where Docker comes in to solve the problem. Docker allows you to package your whole application (model, API, dependencies) in a container. A container acts the same way wherever it goes, so "it works on my machine" errors disappear. For example, to create an image container, you create a Dockerfile. This file defines the base image (such as Python), installs the requirements, copies your code, and sets the start command. Docker can create an image from this Dockerfile. That picture is the blueprint that you use to spin up containers. The result is a lightweight, portable unit that can be easily deployed. So whether you use it on your laptop, a cloud service, or production servers, the model will behave consistently every time.

Docker brings structure and reliability to model Deployment. Its advantages are clear:

For the data scientist, this means the difference between a flimsy demo and a production-grade one. Your model is something teams can trust - repeatable, stable, and easy to scale. Docker isn't just a tool; it's what can make the difference between your work going from theory to power.

Deployment is where models pay off. A project that's stuck in a notebook, no matter how accurate, is only an experiment. When combined with APIs and Docker, it becomes a solution that makes a tangible difference in the real world. Becoming full-stack does not require knowing every tool in detail. It's about seeing the big picture, getting your work done across the finish line. For today's data scientists, the job doesn't stop at 95% accuracy, but delivers real impact to production.

Advertisement

Explore how Nvidia's generative AI suite revolutionizes the Omniverse, enhancing 3D simulations, automation, and efficiency

Explore the underlying engineering of contextual ASR and how it enables Alexa to understand speech in context, making voice interactions feel more natural and intuitive

Why AI training is increasingly being shaped by tech giants instead of traditional schools. Explore how hands-on learning, tools, and real-time updates from major tech companies are changing the way people build careers in AI

Explore how AI enhances employee performance, learning, and engagement across today's fast-changing workplace environments.

Learn to boost PyTorch with custom kernels, exploring speed gains, risks, and balanced optimization for lasting performance

How to use a Python For Loop with easy-to-follow examples. This beginner-friendly guide walks you through practical ways to write clean, effective loops in Python

Learn the top 5 strategies to implement AI at scale in 2025 and drive real business growth with more innovative technology.

Can machines truly think like us? Discover how Artificial General Intelligence aims to go beyond narrow AI to learn, reason, and adapt like a human

Learn how advanced SQL techniques like full-text search, JSON functions, and regex make it possible to handle unstructured data

Discover seven powerful ways AI helps manage uncertainty and improve resilience in today's fast-changing business world.

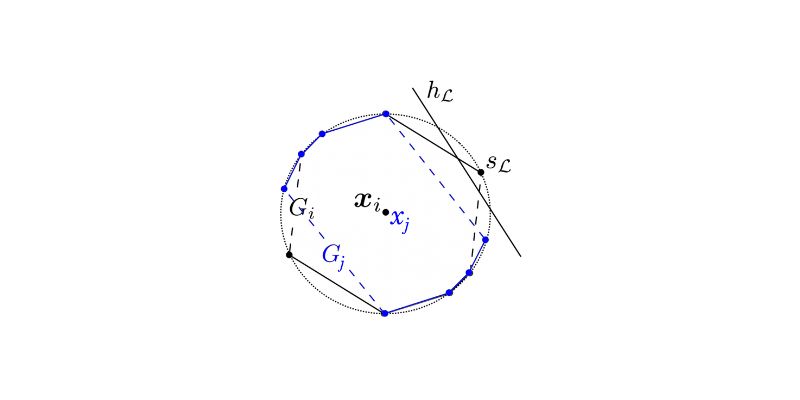

Explore statistical learnability of strategic linear classifiers with simple walkthroughs and practical AI learning concepts

AI is reshaping the education sector by creating personalized learning paths, automating assessments, improving administration, and supporting students through virtual tutors. Discover how AI is redefining modern classrooms and making education more inclusive, efficient, and data-driven