
Thanks to its flexibility and developer-friendly design, PyTorch has become one of the most widely used deep learning frameworks. Researchers, students, and professionals rely on it for both research and production systems. Still, performance remains a constant concern when training large models or processing large volumes of data, and developers look for ways to cut execution time without sacrificing accuracy.

One approach is to write custom kernels that replace slower built-in operations. Custom kernels can deliver major speed gains, but they also add significant complexity and real risks, so it is essential to understand how and why they work before adopting them. This article examines the approaches, challenges, and trade-offs involved in maximizing speed. The goal is balance, not reckless optimization.

In PyTorch, a kernel is the low-level routine that actually carries out an operation on the hardware. Kernels power nearly every operation inside a neural network model, and depending on the task's requirements they target CPUs or GPUs. Developers typically rely on the kernels that ship with the framework. Custom kernels, by contrast, provide precise control over computation paths and memory usage. They are usually written in C++ for CPU execution or in CUDA for GPU acceleration.

Replacing general-purpose operations with specialized code can yield speed gains because it removes the overhead of one-size-fits-all designs. However, developing such kernels requires in-depth technical knowledge and strong debugging skills, and newcomers often underestimate the steep learning curve. Frequent testing, small prototypes, and solid documentation minimize early risks, and careful planning makes real performance improvements far more likely.

Custom kernels increase speed by removing layers of abstraction within PyTorch. Prebuilt kernels must support a wide range of shapes, data types, and memory layouts, and when the same operation runs repeatedly under identical conditions, that flexibility becomes overhead. A kernel written for a single task avoids the extra branching and checks. For example, tailoring a matrix multiplication to a fixed shape can improve speed, and fusing several elementwise operations into one kernel avoids writing intermediate results to memory between steps.

Custom kernels also provide tighter control over memory access and GPU threads, which helps avoid unnecessary memory traffic and data transfers. Models train and run inference faster when these delays are removed, and for heavy workloads, benchmarks frequently show double-digit percentage improvements. However, actual performance depends on the quality of the implementation, and not every custom kernel delivers better results. Success requires careful measurement, profiling, and iteration; repeated benchmarking is what confirms that a kernel consistently delivers the promised acceleration.
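
A minimal benchmarking sketch using `torch.utils.benchmark.Timer`, which handles warm-up and CUDA synchronization, to compare two ways of computing the same hypothetical operation, `relu(alpha * x + y)`. The `two_ops` variant is only a pure-PyTorch stand-in for an "optimized" implementation. Which version wins, and by how much, depends on hardware, thread count, and tensor size, so no result is claimed here.

```python
import torch
from torch.utils import benchmark

def three_ops(x, y, alpha):
    # Scale, add, and ReLU run as separate ops, each writing a temporary tensor.
    return torch.relu(alpha * x + y)

def two_ops(x, y, alpha):
    # torch.add computes y + alpha * x in one op; relu_ then works in place.
    return torch.add(y, x, alpha=alpha).relu_()

def measure(fn, x, y, alpha):
    # blocked_autorange picks the repetition count so the total run
    # lasts at least min_run_time seconds, then reports statistics.
    timer = benchmark.Timer(
        stmt="fn(x, y, alpha)",
        globals={"fn": fn, "x": x, "y": y, "alpha": alpha},
        label="scale_add_relu",
        description=fn.__name__,
    )
    return timer.blocked_autorange(min_run_time=0.2)

torch.manual_seed(0)
x = torch.randn(1_000_000)
y = torch.randn(1_000_000)

# Check correctness before comparing speed: a faster wrong answer is useless.
torch.testing.assert_close(three_ops(x, y, 2.0), two_ops(x, y, 2.0))

for fn in (three_ops, two_ops):
    m = measure(fn, x, y, 2.0)
    print(f"{fn.__name__}: median {m.median * 1e3:.3f} ms")
```

Reporting the median rather than a single run makes the comparison less sensitive to one-off noise, and the same harness can be pointed at a custom kernel once it exists.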

Developing a custom kernel may seem appealing, but it demands significant technical expertise. Developers need to understand memory management, parallel processing, and CUDA programming to use these tools effectively, and GPU code is much harder to debug than Python scripts. Errors can silently produce incorrect outputs or crash training runs. The risk grows as projects become larger and more complex, and tracking down a minor memory-handling error can take weeks.
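
When a kernel comes with a hand-written backward pass, `torch.autograd.gradcheck` is a cheap first line of defense: it compares the analytical gradients against finite differences. The sketch below wraps a hypothetical `relu(alpha * x + y)` op in a `torch.autograd.Function`; in a real project the forward would call the custom kernel. `BuggyScaleAddReLU` is a deliberately broken, hypothetical variant showing the kind of error that runs without complaint but quietly trains a model incorrectly.

```python
import torch
from torch.autograd import gradcheck

class ScaleAddReLU(torch.autograd.Function):
    """relu(alpha * x + y) with a hand-written backward pass."""

    @staticmethod
    def forward(ctx, x, y, alpha):
        out = torch.relu(alpha * x + y)
        ctx.save_for_backward(out)
        ctx.alpha = alpha
        return out

    @staticmethod
    def backward(ctx, grad_out):
        (out,) = ctx.saved_tensors
        mask = (out > 0).to(grad_out.dtype)  # ReLU passes gradient only where out > 0
        grad_y = grad_out * mask
        grad_x = grad_y * ctx.alpha          # chain rule: d(alpha * x)/dx = alpha
        return grad_x, grad_y, None          # no gradient for the Python float alpha

class BuggyScaleAddReLU(torch.autograd.Function):
    """Same forward, but the backward 'forgets' alpha: a silent bug."""

    @staticmethod
    def forward(ctx, x, y, alpha):
        out = torch.relu(alpha * x + y)
        ctx.save_for_backward(out)
        return out

    @staticmethod
    def backward(ctx, grad_out):
        (out,) = ctx.saved_tensors
        grad = grad_out * (out > 0).to(grad_out.dtype)
        return grad, grad, None  # wrong: grad_x should be scaled by alpha

# gradcheck needs float64 inputs; these values keep alpha * x + y away from
# the ReLU kink at 0, where finite differences are unreliable.
x = torch.linspace(-1.0, 1.0, 8, dtype=torch.float64, requires_grad=True)
y = torch.full((8,), 0.3, dtype=torch.float64, requires_grad=True)

print(gradcheck(ScaleAddReLU.apply, (x, y, 2.0)))                              # True
print(gradcheck(BuggyScaleAddReLU.apply, (x, y, 2.0), raise_exception=False))  # False
```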

The major drawbacks are the heavy time investment and mental strain. Many teams also underestimate the cost of maintaining kernels once they are in production. Hardware changes frequently break finely tuned code, and upgrading PyTorch versions can introduce compatibility problems. Because of their steep learning curve, custom kernels are not suited to casual experimentation; they are best reserved for critical performance bottlenecks where the advantages outweigh the long-term risks. Strong technical skills and careful planning are essential for success.

Custom kernels can increase speed, but they also have unintended consequences. One significant problem is inaccurate results that go unnoticed: small coding mistakes can introduce subtle errors during training or inference, and without obvious warnings, those errors can propagate into model outputs. Another risk is poor portability across GPUs and environments, since a kernel that works well on one device might not work well on another.
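
Silent errors are easiest to catch by comparing a custom implementation against a trusted reference on deliberately awkward inputs, not just typical ones. The sketch below uses softmax as a stand-in for a custom kernel (an assumption for illustration): a hypothetical "fast" version that skips the standard max-subtraction step matches `torch.softmax` on ordinary inputs but returns NaN as soon as a logit is large enough to overflow `exp` in float32.

```python
import torch

def naive_softmax(x):
    # Hypothetical "fast" version: skips the max-subtraction step.
    e = torch.exp(x)
    return e / e.sum(dim=-1, keepdim=True)

def stable_softmax(x):
    # Subtracting the row max keeps exp() from overflowing.
    e = torch.exp(x - x.amax(dim=-1, keepdim=True))
    return e / e.sum(dim=-1, keepdim=True)

def failing_cases(candidate, cases):
    """Return the names of test cases where candidate disagrees with torch.softmax."""
    failures = []
    for name, x in cases.items():
        try:
            torch.testing.assert_close(candidate(x), torch.softmax(x, dim=-1))
        except AssertionError:
            failures.append(name)
    return failures

torch.manual_seed(0)
cases = {
    "typical": torch.randn(4, 16),                        # small, well-behaved values
    "large_logits": torch.tensor([[100.0, 1.0, -50.0]]),  # exp(100) overflows float32
    "all_equal": torch.zeros(2, 8),
}

print(failing_cases(naive_softmax, cases))   # ['large_logits']
print(failing_cases(stable_softmax, cases))  # []
```

A test suite built only from `torch.randn` inputs would have passed both versions; the edge case is what exposes the bug.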

Maintaining consistent performance across configurations becomes difficult. Because kernels operate at a low level with direct memory access, they also introduce security risks: poorly written code, such as code that reads or writes out of bounds, can lead to vulnerabilities or crashes. Teams must weigh potential acceleration against these risks, since chasing speed without caution often results in fragile systems. Documentation, version control, and cross-environment testing reduce these risks, and responsible practices help mitigate the negative effects of optimization.
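
Cross-environment testing can start as a simple sweep over every device, dtype, and memory layout available on the current machine. In this sketch, `candidate` is a hypothetical stand-in for the custom op under test, and the reference is computed in float64 and cast back. The non-contiguous "transposed" and "strided" cases matter because kernels that quietly assume contiguous memory are a common source of portability bugs.

```python
import torch

def candidate(x, y, alpha=2.0):
    # Stand-in for the custom kernel under test (hypothetical); swap in the real op.
    return torch.add(y, x, alpha=alpha).clamp_min_(0)

def reference(x, y, alpha=2.0):
    # Compute in float64, then cast back, for the most trustworthy reference.
    return torch.relu(alpha * x.double() + y.double()).to(x.dtype)

def sweep():
    """Run the candidate on every device, dtype, and memory layout available here."""
    devices = ["cpu"] + (["cuda"] if torch.cuda.is_available() else [])
    dtypes = [torch.float32, torch.float64, torch.bfloat16]
    tested = []
    torch.manual_seed(0)
    for device in devices:
        for dtype in dtypes:
            x = torch.randn(64, 32, device=device).to(dtype)
            y = torch.randn(64, 32, device=device).to(dtype)
            layouts = {
                "contiguous": (x, y),
                "transposed": (x.t(), y.t()),       # non-contiguous views
                "strided": (x[:, ::2], y[:, ::2]),  # every other column
            }
            for layout, (a, b) in layouts.items():
                # assert_close uses default tolerances per dtype (looser for bfloat16).
                torch.testing.assert_close(candidate(a, b), reference(a, b))
                tested.append((device, dtype, layout))
    return tested

for device, dtype, layout in sweep():
    print(f"ok: {device} {dtype} {layout}")
```

Running the same script on each target machine, and keeping its output under version control, gives a cheap record of where the kernel has actually been validated.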

Developers should balance maintainability against performance gains. Custom kernels offer short-term benefits but add long-term responsibility: teams must update their strategies, maintain test suites, and keep documentation current. Without proper upkeep, kernels quickly become a burden. Maintainable code keeps models dependable as frameworks change, and it reduces onboarding costs for new developers.

Another part of striking a balance is concentrating only on important bottlenecks. Optimizing every operation is wasteful; profiling identifies the few functions that matter most, and rewriting only those saves time and effort. Cooperation also matters. Sharing optimized kernels lets the community distribute maintenance work, and open-source contributions build trust and stability. The key to balance is choosing the right battles rather than chasing every possible speed boost. The ultimate goal is sustainable, long-term performance.
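
A minimal profiling sketch with `torch.profiler`, using a small hypothetical model in place of a real workload. The table ranks operators by self CPU time; in a real project, only the top few entries are worth considering as custom-kernel candidates.

```python
import torch
from torch.profiler import ProfilerActivity, profile

torch.manual_seed(0)
model = torch.nn.Sequential(
    torch.nn.Linear(256, 512), torch.nn.ReLU(), torch.nn.Linear(512, 10)
).eval()
x = torch.randn(64, 256)

# Add ProfilerActivity.CUDA to the list when profiling GPU work.
with profile(activities=[ProfilerActivity.CPU], record_shapes=True) as prof:
    with torch.no_grad():
        for _ in range(20):
            model(x)

# Rank operators by self CPU time to see where the time actually goes.
print(prof.key_averages().table(sort_by="self_cpu_time_total", row_limit=5))

def top_ops(prof, n=3):
    """Return the names of the n operators with the highest self CPU time."""
    events = sorted(prof.key_averages(), key=lambda e: e.self_cpu_time_total, reverse=True)
    return [e.key for e in events[:n]]

print(top_ops(prof))
```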

Developers should consider workable alternatives before creating custom kernels. PyTorch comes with several optimization tools. Mixed precision training shortens computation times without requiring significant code changes. DataLoader settings such as multiple worker processes and pinned memory improve throughput by removing input bottlenecks. Models can also be compiled and traced with TorchScript for faster execution.
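
A minimal sketch of two of these built-in options on a small hypothetical model: `torch.autocast` for mixed precision and `torch.jit.trace` for TorchScript. It runs on CPU with bfloat16 so no GPU is needed; on a GPU one would typically pass `device_type="cuda"`, and float16 training there is usually paired with gradient scaling (`GradScaler`).

```python
import torch

torch.manual_seed(0)
model = torch.nn.Sequential(
    torch.nn.Linear(128, 256), torch.nn.ReLU(), torch.nn.Linear(256, 10)
).eval()
x = torch.randn(32, 128)

with torch.no_grad():
    y_fp32 = model(x)

    # Mixed precision: inside autocast, eligible ops such as linear layers
    # run in bfloat16 while the model's parameters stay in float32.
    with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
        y_mixed = model(x)

    # TorchScript: trace records the ops executed for this example input.
    traced = torch.jit.trace(model, x)
    y_traced = traced(x)

print(y_mixed.dtype)                                   # torch.bfloat16
print((y_mixed.float() - y_fp32).abs().max().item())  # precision cost of bfloat16
print(torch.allclose(y_traced, y_fp32))                # True
```

Neither change touches the model definition, which is the main appeal of trying these options before writing any kernel code.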

CUDA libraries such as cuDNN and cuBLAS already provide highly optimized operations, and PyTorch calls them under the hood. These libraries frequently deliver adequate performance without any custom code. Quantization and model-size reduction are further options, and both can greatly cut computing demands. For distributed workloads, PyTorch's DistributedDataParallel increases throughput across multiple GPUs. Exploring these built-in options first saves time and reduces risk. Custom kernels should be used only when essential, after gradual optimization with safer built-in methods. Solid foundations always precede advanced techniques.
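
Quantization can take only a few lines. The sketch below applies PyTorch's post-training dynamic quantization (`torch.ao.quantization.quantize_dynamic`) to the `Linear` layers of a small hypothetical model, storing their weights as int8. It runs on CPU; the accuracy and speed impact depends on the model and hardware and should be measured rather than assumed.

```python
import torch
from torch.ao.quantization import quantize_dynamic

torch.manual_seed(0)
model = torch.nn.Sequential(
    torch.nn.Linear(256, 512), torch.nn.ReLU(), torch.nn.Linear(512, 10)
).eval()

# Returns a quantized copy; the original float model is left unchanged.
# Linear weights are stored as int8, and activations are quantized on the fly.
qmodel = quantize_dynamic(model, {torch.nn.Linear}, dtype=torch.qint8)

x = torch.randn(8, 256)
with torch.no_grad():
    ref = model(x)
    out = qmodel(x)

print(type(qmodel[0]))                 # a dynamically quantized Linear module
print((out - ref).abs().max().item())  # quantization error; check it on real data
```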

Custom kernels in PyTorch can provide remarkable performance gains, but they also present significant challenges, and developers must carefully balance the risks and rewards. Long-term maintenance, hidden errors, and debugging complexity are the darker side. Responsible teams start by profiling and using built-in optimizations before writing kernels, and when custom kernels are necessary, they invest in thorough testing and documentation. Used properly, kernels can significantly improve performance, and sustainable practices keep those gains stable over time. With smart adoption, PyTorch developers can move forward while avoiding unnecessary pitfalls.

Advertisement

Learn the top 5 strategies to implement AI at scale in 2025 and drive real business growth with more innovative technology.

Discover seven powerful ways AI helps manage uncertainty and improve resilience in today's fast-changing business world.

Data scientists should learn encapsulation as it improves flexibility, security, readability, and maintainability of code

How NVIDIA’s Neuralangelo is redefining 3D video reconstruction by converting ordinary 2D videos into detailed, interactive 3D models using advanced AI

Not sure how to trust a language model? Learn how to evaluate LLMs for accuracy, reasoning, and task performance—without falling for the hype

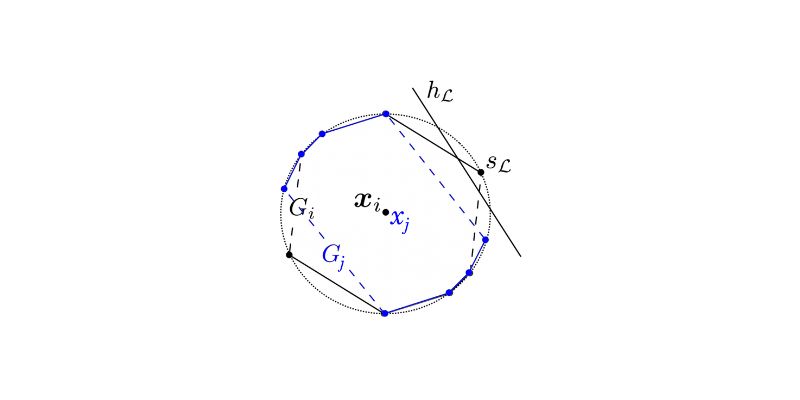

Explore statistical learnability of strategic linear classifiers with simple walkthroughs and practical AI learning concepts

Explore how AI is boosting cybersecurity with smarter threat detection and faster response to cyber attacks

AI is reshaping the education sector by creating personalized learning paths, automating assessments, improving administration, and supporting students through virtual tutors. Discover how AI is redefining modern classrooms and making education more inclusive, efficient, and data-driven

Explore the underlying engineering of contextual ASR and how it enables Alexa to understand speech in context, making voice interactions feel more natural and intuitive

Learn to boost PyTorch with custom kernels, exploring speed gains, risks, and balanced optimization for lasting performance

Discover how 9 big tech firms are boldly shaping generative AI trends, innovative tools, and the latest industry news.

What happens when energy expertise meets AI firepower? Schneider Electric and Nvidia’s partnership is transforming how factories are built and optimized—from virtual twins to real-world learning systems