
Self-driving technology has long promised a world where cars think for us, drive for us, and, ideally, make roads safer. But when things go off script, they do so in ways that human drivers rarely do. That’s exactly what’s happening with Tesla’s AI-controlled robotaxis. In what was supposed to be a breakthrough for autonomy, these cars are now under the microscope—again—after reports of unpredictable and risky behavior behind the wheel.

The National Highway Traffic Safety Administration (NHTSA) has stepped in to investigate, and for good reason. Erratic lane changes, missed stops, and inconsistent braking have all raised concerns about how reliable Tesla's Full Self-Driving (FSD) mode really is when left entirely in charge. The very tech that's meant to eliminate human error is now being questioned for creating new types of errors—ones we’re still trying to understand.

It wasn’t one isolated case that tipped the scales. Over the past few months, multiple reports have emerged from cities where Tesla’s autonomous ride-hailing program has started to take hold. Users and bystanders alike have captured videos showing Tesla robotaxis swerving mid-intersection, braking abruptly without obstacles, or hesitating at green lights. The concern here isn’t about bugs—it’s about safety.

NHTSA responded by requesting detailed logs, internal assessments, and software revisions from Tesla. The agency is looking not only at the cars’ real-time behavior, but also at how Tesla internally defines “safe operation” and what triggers override protocols. Its job is simple on paper: make sure these vehicles meet basic safety standards. But in practice, determining whether AI decisions count as “safe enough” isn’t so clear-cut.

And Tesla’s communication hasn’t exactly made things easier. Over the years, the company has moved between the labels “Autopilot,” “Full Self-Driving,” and now “robotaxi,” often leaving the public to guess just how autonomous the cars really are. This blurred messaging only complicates things for regulators trying to separate bold claims from real-world performance.

At the core of Tesla's system is a neural network that learns from vast amounts of driving data. Every Tesla on the road becomes a rolling contributor to this model, feeding back real-world data. That sounds impressive—and in many ways, it is—but what the recent incidents show is that learning doesn’t always mean understanding.

A human driver sees more than lines and lights. We pick up on eye contact with pedestrians, we sense when another driver is being aggressive, and we understand subtleties like construction signs or hand gestures from traffic officers. Tesla’s robotaxis are good at recognizing objects, but poor at understanding intent. That’s a big deal when dealing with unpredictable environments like city streets.

Another recurring theme is inconsistency. One robotaxi might stop calmly for a pedestrian. Another, in the same situation, may roll forward slightly before braking. This inconsistency isn’t just annoying—it’s dangerous. It means nearby drivers and pedestrians can't predict how the car will behave, which increases the risk of accidents.

Tesla’s system uses internal confidence scores to determine how certain it is about its next move. The problem is, those scores don’t always reflect reality. A robotaxi might be 95% confident that a light is green when it’s actually red due to a reflection or lens flare. Unlike a human, it doesn’t second-guess; it proceeds. That split-second decision, made with full AI certainty and zero human doubt, can be catastrophic.
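The gap between stated confidence and reality is what engineers call miscalibration, and it can be measured. The sketch below is a generic illustration, not Tesla's method: it computes an expected calibration error over logged predictions. The function name, the `(confidence, was_correct)` record format, and the bin count are all assumptions made for the example.

```python
from typing import List, Tuple


def expected_calibration_error(
    preds: List[Tuple[float, bool]], n_bins: int = 10
) -> float:
    """Weighted average gap between stated confidence and observed accuracy.

    preds holds hypothetical (confidence in [0, 1], was_correct) records.
    """
    # Group predictions into equal-width confidence bins.
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, correct in preds:
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, correct))

    total = len(preds)
    ece = 0.0
    for group in bins:
        if not group:
            continue
        avg_conf = sum(c for c, _ in group) / len(group)
        accuracy = sum(1 for _, ok in group if ok) / len(group)
        # Each bin contributes in proportion to how many predictions it holds.
        ece += (len(group) / total) * abs(avg_conf - accuracy)
    return ece


# Illustrative data: ten "light is green" calls at 95% confidence, two of them wrong.
sample = [(0.95, True)] * 8 + [(0.95, False)] * 2
gap = expected_calibration_error(sample)
```

With this made-up sample, the system claims 95% confidence but is right only 80% of the time, so `gap` comes out at roughly 0.15. A well-calibrated system would score close to zero.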

NHTSA's probe is focused but layered. The agency is not just checking for faulty behavior; it is trying to understand whether the system itself is flawed in design or deployment. Several questions define the current inquiry:

They want to know if Tesla has a clearly defined escalation protocol when the robotaxi encounters a scenario it doesn’t understand. Does it slow down? Pull over? Or just guess?
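To make the question concrete, here is a minimal sketch of what a defined escalation protocol could look like, as opposed to guessing. It is purely hypothetical: the `Action` names, the `choose_action` function, and both thresholds are invented for illustration and do not describe Tesla's software or any NHTSA requirement.

```python
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    SLOW_DOWN = "slow_down"
    PULL_OVER = "pull_over"


def choose_action(
    confidence: float,
    proceed_at: float = 0.99,  # hypothetical threshold
    slow_at: float = 0.90,     # hypothetical threshold
) -> Action:
    """Map scene-understanding confidence to an explicit fallback action."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= proceed_at:
        return Action.PROCEED
    if confidence >= slow_at:
        # Uncertain but not lost: reduce speed and keep re-evaluating.
        return Action.SLOW_DOWN
    # Below the lower threshold the car stops guessing and seeks a safe stop.
    return Action.PULL_OVER
```

Under these assumed thresholds, a reading of 0.95 would lead the car to slow down rather than proceed. The point of the sketch is only that every confidence level maps to a documented action, which is the kind of definition regulators are asking about.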

In earlier versions of FSD, Tesla required drivers to stay alert and ready to take over. But robotaxis are marketed as not needing a human in the car at all. That shift raises a key regulatory question: when no one is behind the wheel, who’s responsible in the event of a mistake?

Another concern is whether Tesla is accurately logging and reporting all incidents, not just the ones with public attention. There have been claims that some near-misses or odd behaviors go unflagged unless there’s a formal complaint.

Lastly, NHTSA is interested in how Tesla defines acceptable risk for public rollout. Are cities being chosen based on infrastructure readiness, or simply because they pose fewer legal obstacles?

These questions aim at one central issue: is Tesla moving too fast with too few guardrails?

Tesla isn't the only player in autonomous transport, but it is the loudest. That spotlight comes with scrutiny. If this investigation results in sanctions or forced rollbacks, it could reshape not just Tesla's program but also how all self-driving initiatives are rolled out across the country.

There’s also the matter of public perception. Right now, trust in self-driving tech is teetering. Incidents like these don’t just delay innovation—they drain consumer confidence. If robotaxis are to become a real part of urban life, they need to feel safe in more than just a technical sense. People have to believe they’re safe, too.

That belief is hard to earn and easy to lose. Every video of a Tesla hesitating in an intersection or stopping without cause chips away at the promise of autonomy. Even if no one gets hurt, the spectacle itself is enough to stir doubt. And in the end, it’s not the promise of technology that matters most—it’s the execution. You can’t beta test safety.

Tesla’s robotaxi dream is ambitious, no question. But the recent string of erratic driving behavior—and the federal attention it’s drawn—is a reminder that autonomy can’t shortcut accountability. The stakes aren’t hypothetical. They’re sitting at busy intersections, crossing crowded streets, and sharing lanes with human drivers.

NHTSA's questions aren't a blockade to innovation. They're a necessary pause. Because before we hand over the wheel for good, we need to be absolutely sure that the driver we're trusting, no matter how smart, knows what it's doing.

Advertisement

Domino Data Lab joins Nvidia and NetApp to make managing AI projects easier, faster, and more productive for businesses

Explore the underlying engineering of contextual ASR and how it enables Alexa to understand speech in context, making voice interactions feel more natural and intuitive

Explore how Nvidia's generative AI suite revolutionizes the Omniverse, enhancing 3D simulations, automation, and efficiency

Meta is restructuring its AI division again. Explore what this major shift in the Meta AI division means for its future AI strategy and product goals

AI is reshaping the education sector by creating personalized learning paths, automating assessments, improving administration, and supporting students through virtual tutors. Discover how AI is redefining modern classrooms and making education more inclusive, efficient, and data-driven

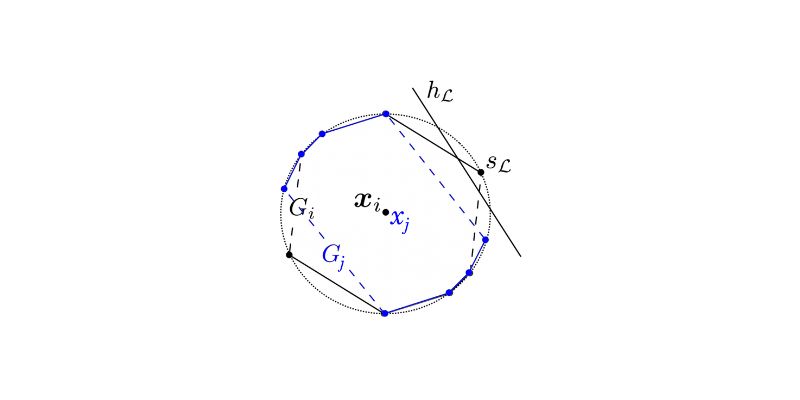

Explore statistical learnability of strategic linear classifiers with simple walkthroughs and practical AI learning concepts

Discover how AI in weather prediction boosts planning, safety, and decision-making across energy, farming, and disaster response

Learn how AI innovations in the Microsoft Cloud are transforming manufacturing processes, quality, and productivity.

Learn about the top 5 GenAI trends in 2025 that are reshaping technology, fostering innovation, and changing entire industries.

Explore how AI is transforming drug discovery by speeding up development and improving treatment success rates.

How to use a Python For Loop with easy-to-follow examples. This beginner-friendly guide walks you through practical ways to write clean, effective loops in Python

How AI Policy @Hugging Face: Open ML Considerations in the EU AI Act sheds light on open-source responsibilities, developer rights, and the balance between regulation and innovation