
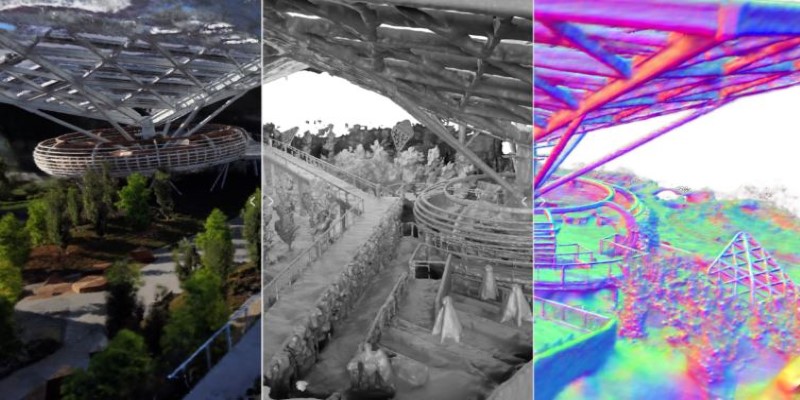

There’s a big shift happening in how we look at video. For decades, a video was just a series of flat images. Even with better resolution or clever editing, it was still stuck on the screen. Now, NVIDIA’s Neuralangelo is changing that. It can take ordinary 2D video and build a detailed 3D model from it, almost like sculpting a digital statue out of a short clip. This isn’t about special effects or filters. Neuralangelo uses AI to analyze how light, angle, and perspective relate across frames and turns that into something you can explore in full 3D.

At the heart of Neuralangelo is a method called neural surface reconstruction. It builds on neural radiance fields (NeRFs), a type of AI model that learns to predict how a scene appears from different angles. Earlier NeRF-based approaches could produce decent 3D reconstructions, but they were limited in texture, sharpness, and surface quality. Neuralangelo goes further by representing surfaces with a neural signed distance function and by recovering detail from coarse to fine using multi-resolution hash grids. The name is a nod to Michelangelo: like a sculptor revealing a figure from a block of marble, the model brings out detailed 3D structure from video.
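
To make the NeRF idea concrete: a NeRF-style model predicts a density and a color at points sampled along each camera ray, then blends them into a single pixel with volume rendering. The sketch below shows only that blending step and assumes the per-sample densities and colors have already been predicted by a network. The function name `render_ray` and the sample values are illustrative, not taken from Neuralangelo's code.

```python
import math


def render_ray(densities, colors, deltas):
    """Alpha-composite samples along one camera ray (NeRF-style volume rendering).

    densities: non-negative volume density at each sample (assumed already predicted)
    colors:    (r, g, b) color at each sample
    deltas:    distance from each sample to the next one along the ray
    Returns the rendered pixel color and the light left over after the last sample.
    """
    transmittance = 1.0  # fraction of light that reaches the current sample unblocked
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # how much this sample contributes
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha            # light blocked by this segment
    return tuple(pixel), transmittance


# Toy ray: empty space, then a dense red surface.
pixel, leftover = render_ray(
    densities=[0.0, 0.0, 50.0, 50.0],
    colors=[(0, 0, 0), (0, 0, 0), (1, 0, 0), (1, 0, 0)],
    deltas=[0.1, 0.1, 0.1, 0.1],
)
print(pixel, leftover)
```

Because each sample's weight is "light still arriving" times "opacity here", the weights sum to at most 1; whatever transmittance remains is light that passed through the whole ray without hitting anything.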

The process begins with an ordinary video, often shot on a smartphone or a drone. Camera positions for the frames are first estimated with standard structure-from-motion tools; Neuralangelo then uses the shifts in perspective as the camera moves to work out how objects are shaped and where they sit in 3D space. Unlike older methods that rely heavily on manual annotation or specialized hardware such as LiDAR, it learns from the light and structure within the frames themselves. The result is a highly detailed, textured 3D model.
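
The geometric intuition behind "shifts in perspective" is classical triangulation: if the same point is seen from two camera positions, the two viewing rays meet at (or very near) that point. Neuralangelo itself does not triangulate points this way; it needs known camera poses, which in practice are usually estimated beforehand with a structure-from-motion tool such as COLMAP, and it then learns the surface through rendering. The sketch below only illustrates why camera motion reveals depth. All function names are hypothetical, and camera centers and ray directions are assumed to be known.

```python
def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def point_on_ray(origin, direction, t):
    return tuple(o + t * d for o, d in zip(origin, direction))


def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point seen along two viewing rays.

    c1, c2: camera centers; d1, d2: directions from each camera toward the point.
    Returns the midpoint of the shortest segment joining the two rays.
    """
    w0 = subtract(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays give no parallax, so depth along them cannot be recovered.
        raise ValueError("rays are parallel; depth is undetermined")
    s = (b * e - c * d) / denom  # position of the closest point along ray 1
    t = (a * e - b * d) / denom  # position of the closest point along ray 2
    p1, p2 = point_on_ray(c1, d1, s), point_on_ray(c2, d2, t)
    return tuple((x + y) / 2 for x, y in zip(p1, p2))


# Two cameras 2 units apart, both looking at a point 5 units ahead.
print(triangulate_midpoint((-1, 0, 0), (1, 0, 5), (1, 0, 0), (-1, 0, 5)))
```

The parallel-ray check is the same limitation photogrammetry faces: without a change in viewing angle there is nothing to triangulate.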

What makes Neuralangelo stand out is its sharpness and fidelity. It doesn't just generate rough shapes; it captures fine details such as cracks in stone, wood grain, and panes of glass. This level of detail matters most in fields where precision is non-negotiable, like game development, architecture, or robotics.

Neuralangelo’s most obvious use is in content creation. Game designers and filmmakers often spend weeks or months building environments by hand. Neuralangelo can cut that time drastically. For example, instead of modeling a real-world building from scratch, a developer can just film it with a phone and use the AI to create a detailed 3D version. That model can then be dropped into a game or a virtual set.

Architecture firms are also paying attention. Normally, creating a digital twin of a building involves scanning, drone mapping, or photogrammetry. Neuralangelo simplifies that by using standard video footage. A walk-through video of a construction site or heritage building becomes a tool for inspection, restoration planning, or virtual tours.

In robotics, the ability to reconstruct accurate 3D environments is critical. Robots often rely on dedicated sensors to understand their surroundings. If Neuralangelo can create precise maps from video, it could become a low-cost alternative to expensive scanning equipment. That would be especially useful in places like warehouses, disaster zones, or areas with limited access.

Another field where Neuralangelo can make a difference is education. Museums and universities could use it to create 3D versions of artifacts or historical sites from basic footage. Students and visitors could then explore them in virtual reality without being on-site. This changes how people engage with history, art, and science: not just reading about them but moving through them.

To understand why Neuralangelo matters, you have to look at what came before. Previous 3D reconstruction methods often relied on multi-view stereo or photogrammetry. These systems are sensitive to lighting, camera shake, and image quality. They also struggle with surfaces that are shiny, transparent, or repetitive in pattern.

Neuralangelo avoids many of these traps. By representing the scene with neural fields and rendering them volumetrically, it builds a continuous, consistent model of the object or space. It isn't just matching pixels between frames. It learns the scene's 3D structure well enough to render it from viewpoints that never appear in the video.
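
Surface-based methods in this family, Neuralangelo included, store the scene as a signed distance function (SDF): a field that returns the distance to the nearest surface, positive outside an object and negative inside. To use volume rendering, the SDF values along a ray are converted into opacities that peak where the ray crosses the surface. The sketch below uses the NeuS-style conversion, one widely used way to do this; whether it matches Neuralangelo's exact formulation is an assumption here, and the `sharpness` value and function names are illustrative.

```python
import math


def stable_sigmoid(z):
    """Logistic function written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sdf_to_alphas(sdf_values, sharpness=64.0, eps=1e-6):
    """NeuS-style opacity for each segment between consecutive ray samples.

    sdf_values: signed distances at samples ordered from the camera outward.
    sharpness:  controls how tightly opacity concentrates at the zero crossing.
    """
    alphas = []
    for f_here, f_next in zip(sdf_values, sdf_values[1:]):
        phi_here = stable_sigmoid(sharpness * f_here)
        phi_next = stable_sigmoid(sharpness * f_next)
        # Relative drop of the sigmoid across this segment; clamped at 0 where
        # the SDF increases (the ray is moving away from a surface).
        alphas.append(max((phi_here - phi_next) / (phi_here + eps), 0.0))
    return alphas


# A ray that starts 0.5 units outside a surface and crosses it between samples 3 and 4.
print([round(a, 4) for a in sdf_to_alphas([0.5, 0.3, 0.1, -0.1, -0.3])])
```

Feeding these opacities into front-to-back compositing yields a rendered pixel; during training, the difference between rendered pixels and the real video frames is what shapes the SDF.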

Another key improvement is surface fidelity. Many earlier neural reconstructions had a rubbery or melted appearance. Neuralangelo produces models with crisp edges, well-defined contours, and realistic textures. Its authors credit much of this sharpness to two choices: computing surface gradients numerically and optimizing from coarse to fine. The recovered shape and color are detailed enough that stone, metal, and foliage remain distinguishable from the video alone.
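
One ingredient the Neuralangelo paper credits for its sharper surfaces is computing the gradient of the signed distance function (SDF) numerically, with finite differences, instead of analytically through the network. That gradient feeds regularizers such as the eikonal term, which pushes the gradient's length toward 1 so the field behaves like a true distance. The sketch below shows a central-difference gradient and an eikonal penalty on a toy sphere SDF that stands in for the learned network; the function names and the step size `eps` are illustrative assumptions.

```python
import math


def sphere_sdf(p, radius=1.0):
    """Exact signed distance to a sphere at the origin (stand-in for a network)."""
    return math.sqrt(sum(x * x for x in p)) - radius


def numerical_gradient(sdf, p, eps):
    """Central-difference estimate of the SDF gradient at point p."""
    grad = []
    for axis in range(3):
        plus = list(p)
        minus = list(p)
        plus[axis] += eps
        minus[axis] -= eps
        grad.append((sdf(plus) - sdf(minus)) / (2.0 * eps))
    return grad


def eikonal_penalty(sdf, points, eps=1e-3):
    """Mean squared deviation of the gradient length from 1 over sample points."""
    total = 0.0
    for p in points:
        length = math.sqrt(sum(g * g for g in numerical_gradient(sdf, p, eps)))
        total += (length - 1.0) ** 2
    return total / len(points)


samples = [(2.0, 0.0, 0.0), (0.0, 0.5, 0.0), (0.3, -0.4, 1.2)]
print(eikonal_penalty(sphere_sdf, samples))                     # near 0: a valid distance field
print(eikonal_penalty(lambda p: 2.0 * sphere_sdf(p), samples))  # near 1: gradients are too long
```

Because a finite difference samples the field at two points `eps` apart, a larger `eps` averages over a wider neighborhood, which is why the step size can double as a smoothing control.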

The model also benefits from its training approach. It is optimized for each scene individually, so it does not need to be pretrained on thousands of images or a massive dataset. A short clip, around 50 to 100 frames, can be enough. This is a significant advantage in real-world settings, where conditions are rarely ideal and large-scale data collection is impractical.
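
The coarse-to-fine idea can be sketched as a schedule. Neuralangelo encodes positions with a multi-resolution hash grid (the encoding introduced by Instant NGP) and, per its paper, starts training with only the coarse levels enabled, unlocking finer ones over time while shrinking the finite-difference step used for numerical gradients. The numbers below (level counts, base resolution, growth factor, steps per level) are hypothetical placeholders, not Neuralangelo's published settings, and the scene is assumed to be normalized to a unit cube.

```python
def active_levels(step, total_levels=16, initial_levels=4, steps_per_level=5000):
    """How many hash-grid resolution levels are switched on at a training step."""
    return min(total_levels, initial_levels + step // steps_per_level)


def level_resolution(level, base_resolution=32, growth=1.38):
    """Grid resolution of a level; each level is `growth` times finer than the last."""
    return int(base_resolution * growth ** level)


def gradient_step_size(step):
    """Finite-difference step matched to the cell size of the finest active level."""
    finest = active_levels(step) - 1
    return 1.0 / level_resolution(finest)


for step in (0, 10_000, 30_000, 100_000):
    print(step, active_levels(step), round(gradient_step_size(step), 5))
```

The intuition behind this ordering is that coarse levels settle the overall shape first, so fine levels add detail on top of a stable surface instead of fitting noise early in training.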

Neuralangelo is still a research project for now, but it gives a clear look at where things are headed. As computing gets faster and AI models become more efficient, the line between video and 3D will continue to blur. Everyday users might someday make their own 3D content just by recording a moment on their phone. What used to require a whole team with advanced tools could become as simple as uploading a video.

There are challenges, of course. Reconstructing a scene at high quality still takes substantial time and GPU resources, and real-time 3D reconstruction at this level remains a goal, not a feature. Privacy and copyright will also need to be addressed, especially if people start scanning public or private places in detail.

Still, what Neuralangelo shows is that AI isn’t just automating tasks; it’s expanding what’s possible. By turning ordinary video into something spatial, interactive, and realistic, it changes the role of video in digital design, education, and exploration. We’re not just watching anymore. We’re stepping into it.

Neuralangelo is more than a technical upgrade; it’s a shift in how we interact with digital content. It takes the passive nature of 2D video and flips it into something walkable and explorable. What was once a flat window becomes a doorway into space. That opens up real opportunities not just for creators but for educators, engineers, and researchers. And the best part? All it takes is a video. That simplicity, combined with the depth of what it delivers, is what makes Neuralangelo stand out. It’s not just about better visuals; it’s about turning scenes into spaces you can experience.

Advertisement

Domino Data Lab joins Nvidia and NetApp to make managing AI projects easier, faster, and more productive for businesses

The ChatGPT iOS App now includes a 'Continue' button that makes it easier to resume incomplete responses, enhancing the flow of user interactions. Discover how this update improves daily usage

Explore how AI is transforming drug discovery by speeding up development and improving treatment success rates.

How AI-powered simulation is revolutionizing engineering practices by enabling faster, smarter design and testing. Explore key insights and real-world applications revealed at AWS Summit London

How NVIDIA’s Neuralangelo is redefining 3D video reconstruction by converting ordinary 2D videos into detailed, interactive 3D models using advanced AI

Learn how AI is being used by Microsoft Dynamics 365 Copilot to improve customer service, increase productivity, and revolutionize manufacturing support.

Discover AI’s latest surprises, innovations, and big wins transforming industries and everyday life.

Create an impressive Artificial Intelligence Specialist resume with this step-by-step guide. Learn how to showcase your skills, projects, and experience to stand out in the AI field

AI tools for solo businesses, best AI tools 2025, AI for small business, one-person business tools, AI productivity tools

AI is reshaping the education sector by creating personalized learning paths, automating assessments, improving administration, and supporting students through virtual tutors. Discover how AI is redefining modern classrooms and making education more inclusive, efficient, and data-driven

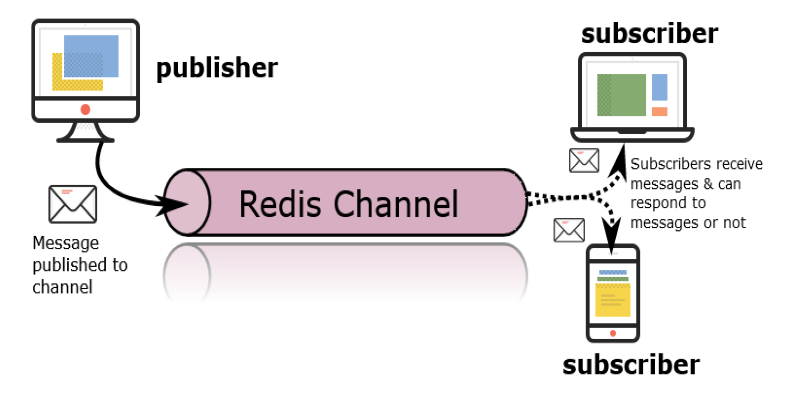

Need instant updates across your app? Learn how Redis Pub/Sub enables real-time messaging with zero setup, no queues, and blazing-fast delivery

Learn about the top 5 GenAI trends in 2025 that are reshaping technology, fostering innovation, and changing entire industries.