Low-level vision is the first step in how a computer sees. It deals with raw visual data—pixels, brightness, color shifts—and transforms this input into useful signals. This isn’t about recognizing objects or making decisions. It’s about detecting edges, cleaning up noise, and enhancing clarity.

Think of it like the eye gathering light before the brain interprets anything. Low-level vision prepares the image, removing the mess and highlighting structure. Everything built on top—face detection, object tracking, scene analysis—starts here. It’s the technical groundwork of computer vision, always working quietly in the background.

Low-level vision performs structural analysis on images. It doesn’t interpret content but identifies key visual patterns and prepares them for further processing.

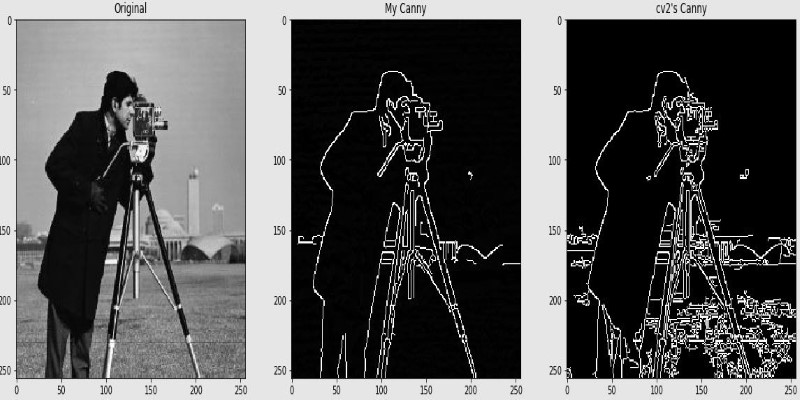

Edges mark boundaries where there’s a clear change in brightness or color. Detecting these gives shape to an otherwise flat image. Methods like Sobel filters or Canny edge detectors work by scanning for intensity changes in the pixel grid. This helps outline objects, patterns, and textures.
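As a rough illustration of the idea, the sketch below applies the two 3x3 Sobel kernels to a tiny grayscale image stored as a list of lists and returns the gradient magnitude. The function name `sobel_magnitude`, the toy image, and the choice to leave border pixels at zero are assumptions made for this example; a production pipeline would normally call an image library instead.

```python
import math

# 3x3 Sobel kernels: KX responds to left-right intensity change, KY to up-down change.
KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude of a 2D list of numbers.

    Border pixels are left at 0.0 (a simplification for this sketch).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    p = img[y + j - 1][x + i - 1]
                    gx += KX[j][i] * p
                    gy += KY[j][i] * p
            out[y][x] = math.hypot(gx, gy)
    return out

# Toy image: dark left half, bright right half, so there is one vertical edge.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
```

In this toy case the response is large only in the two columns that straddle the brightness step and zero in the flat regions. A Canny detector adds further steps on top of such gradients (smoothing, thinning, and hysteresis thresholding), which are not shown here.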

Beyond edges, the system locates stable and distinctive points, called features. These include corners, junctions, or textured areas. Feature extraction lets the computer find matching areas across images. Algorithms like SIFT or ORB are designed to describe such features in a way that holds up under changes in lighting or rotation, which helps in applications like stitching images or tracking movement.
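To make "distinctive points" a little more concrete, here is a minimal sketch of a Harris-style corner response. This is a simpler classical detector, not SIFT or ORB, and it only scores pixels; it does not build descriptors or match them. The function name `harris_response`, the 3x3 window, and the constant `k=0.05` are assumptions chosen for the example.

```python
def harris_response(img, k=0.05):
    """Harris corner response for a 2D list of numbers (borders left at 0.0)."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    # Central-difference gradients on interior pixels.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    resp = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Sum gradient products over a 3x3 window (the structure tensor).
            sxx = syy = sxy = 0.0
            for j in (-1, 0, 1):
                for i in (-1, 0, 1):
                    gx, gy = ix[y + j][x + i], iy[y + j][x + i]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            # det(M) - k * trace(M)^2: positive at corners, negative along edges.
            resp[y][x] = sxx * syy - sxy * sxy - k * (sxx + syy) ** 2
    return resp

# Toy image: a bright square filling the lower-right region of a dark 10x10 frame.
image = [[1 if (y >= 5 and x >= 5) else 0 for x in range(10)] for y in range(10)]
resp = harris_response(image)
best = max((resp[y][x], (x, y)) for y in range(10) for x in range(10))[1]
```

Here `best` lands on the square's corner, while points along its straight sides score below zero and flat regions score exactly zero. That separation between corners, edges, and flat areas is what makes corners useful as anchors for matching.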

Visual data from real-world sources isn’t perfect. Cameras might capture grainy or blurred images, especially in motion or poor lighting. Denoising techniques remove irregularities while keeping the important details. Classic approaches include Gaussian filtering, which smooths out fine-grained noise at the cost of some blur, and median filtering, which is well suited to isolated outlier pixels. Today, some pipelines use trained neural networks that can recognize common patterns of distortion and correct them automatically.
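A median filter is simple enough to sketch in a few lines. The version below replaces each interior pixel with the median of its 3x3 neighborhood; the function name `median_filter3`, the fixed window size, and the decision to copy border pixels unchanged are assumptions for this example.

```python
from statistics import median

def median_filter3(img):
    """3x3 median filter on a 2D list of numbers; border pixels are copied as-is."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + j][x + i] for j in (-1, 0, 1) for i in (-1, 0, 1)]
            out[y][x] = median(window)
    return out

# A flat gray patch with one "hot" pixel, as a stand-in for impulse noise.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
clean = median_filter3(noisy)
```

Because the outlier is a minority in every window that contains it, the median ignores it and the patch comes back uniform. An averaging filter would instead smear the bright value into its neighbors.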

Restoration techniques fill in gaps or correct problems like motion blur. Super-resolution methods estimate a higher-resolution image from lower-resolution input, reconstructing detail that the original capture did not resolve. These processes are widely used in security, satellite imaging, and the restoration of old film.

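Another task handled at this stage is estimating how far away objects are and how they move. Using stereo vision (two slightly different images from separate viewpoints), the system matches points between the two views and measures how far each one shifts horizontally. That shift is called disparity: nearby objects shift more than distant ones, so a disparity map can be converted into depth.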
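The sketch below shows one simple way this comparison can work on a single pair of rectified scanlines: slide a small window along the right image, keep the shift with the lowest sum of absolute differences, and convert it to depth with the standard relation depth = focal length × baseline / disparity. The function names, the window size, and the camera numbers (700 px focal length, 0.1 m baseline) are illustrative assumptions, not values from any particular system.

```python
def disparity_at(left_row, right_row, x, half=1, max_disp=4):
    """Disparity for pixel x of the left scanline, by SAD block matching.

    Assumes rectified images, so a point at x in the left row appears
    at x - d in the right row for some d >= 0.
    """
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half < 0:
            break  # window would fall off the left edge of the right row
        cost = sum(
            abs(left_row[x + i] - right_row[x - d + i])
            for i in range(-half, half + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(d, focal_px, baseline_m):
    """Depth in meters from disparity in pixels; zero disparity maps to infinity."""
    return focal_px * baseline_m / d if d > 0 else float("inf")

# The same small pattern, seen two pixels further left in the right view.
left_row = [0, 0, 0, 0, 9, 5, 7, 0, 0, 0]
right_row = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0]
d = disparity_at(left_row, right_row, x=5)
z = depth_from_disparity(d, focal_px=700, baseline_m=0.1)
```

Real stereo matchers work on full 2D windows and add smoothness constraints and sub-pixel refinement, but the core loop is the same search over candidate shifts.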

Motion is measured through optical flow, which detects how pixels move between frames. That’s useful for tracking moving objects, understanding speed, and supporting navigation. In robotics, drones, or automated driving, this real-time visual feedback is essential for spatial awareness and avoiding collisions.
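One classical way to estimate optical flow is the Lucas-Kanade method, which assumes brightness stays constant and that all pixels in a small window share one motion vector. The sketch below solves the resulting 2x2 linear system for a single window covering the interior of two small frames. The function name `lucas_kanade_window` and the synthetic frames are assumptions made for this example.

```python
def lucas_kanade_window(f1, f2):
    """Estimate one (u, v) flow vector for the interior of two equal-size frames.

    Solves [[a, b], [b, c]] @ [u, v] = [r1, r2], built from image gradients.
    Returns None when the system is (near) singular, i.e. the window lacks texture.
    """
    h, w = len(f1), len(f1[0])
    a = b = c = r1 = r2 = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (f1[y][x + 1] - f1[y][x - 1]) / 2.0  # spatial gradient in x
            gy = (f1[y + 1][x] - f1[y - 1][x]) / 2.0  # spatial gradient in y
            gt = f2[y][x] - f1[y][x]                  # temporal difference
            a += gx * gx
            b += gx * gy
            c += gy * gy
            r1 -= gx * gt
            r2 -= gy * gt
    det = a * c - b * b
    if abs(det) < 1e-12:
        return None
    u = (c * r1 - b * r2) / det
    v = (a * r2 - b * r1) / det
    return u, v

# Synthetic pattern I(x, y) = x * y, then the same pattern moved one pixel to the right.
frame1 = [[x * y for x in range(6)] for y in range(6)]
frame2 = [[max(x - 1, 0) * y for x in range(6)] for y in range(6)]
u, v = lucas_kanade_window(frame1, frame2)
```

For this pattern the estimate recovers a shift of one pixel in x and none in y. The `None` case corresponds to the texture problem described later in the article: on a flat or single-direction pattern the system cannot be solved reliably.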

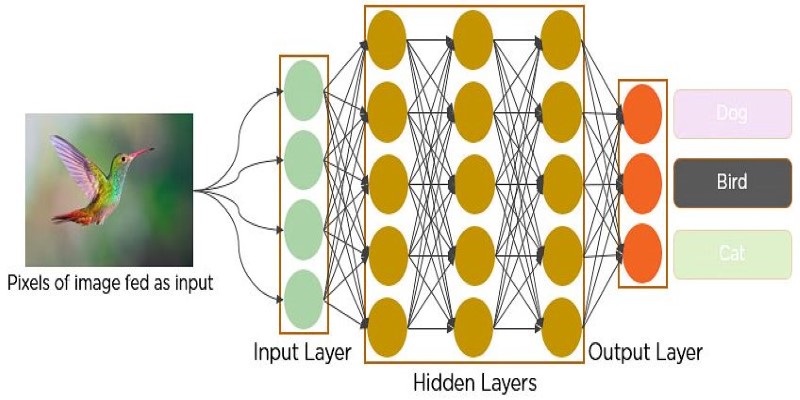

Deep learning has taken over many high-level computer vision tasks. Models like convolutional neural networks can automatically learn to identify features, patterns, or categories. But these systems still rely on clean, usable input.

Low-level vision provides that foundation. If an image is blurry, too dark, or filled with noise, even a well-trained neural network may misinterpret it. That’s why many pipelines include a low-level step to prepare data before it goes into the model. Better inputs mean better outcomes.
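As one small example of such a preparation step, the sketch below stretches the contrast of a dim image so its values span the full 0 to 1 range before being passed on. The function name `stretch_contrast` and the choice of min-max scaling are assumptions for illustration; real pipelines may use other normalizations, such as per-channel mean and variance.

```python
def stretch_contrast(img, eps=1e-8):
    """Min-max scale a 2D list of numbers to the range [0, 1].

    A constant image (no contrast to stretch) is returned as all zeros.
    """
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = hi - lo
    if span < eps:
        return [[0.0] * len(row) for row in img]
    return [[(p - lo) / span for p in row] for row in img]

# An underexposed patch whose values occupy only a narrow band.
dark = [[10, 12], [14, 20]]
prepared = stretch_contrast(dark)
```

After scaling, the darkest pixel maps to 0.0 and the brightest to 1.0, so small differences that were squeezed into a narrow band become easier for a downstream model to use.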

There’s also the benefit of transparency. Classical low-level methods do predictable things. When you apply an edge detector or denoiser, the result is explainable and consistent. This is useful in areas where trust and control are needed—such as medical imaging or industrial inspection.

Low-level techniques are also efficient. They don’t always require large models or GPUs, which makes them ideal for edge computing or low-power devices. In mobile phones, for instance, quick filters improve image quality before any AI takes over. They run fast, with minimal resource use.

In many systems today, traditional low-level vision works alongside neural networks. It handles noise reduction, structure detection, or enhancement, while the learning-based models take care of classification or prediction. The two approaches complement each other rather than compete.

Low-level vision is part of many real-world tools and devices. In mobile photography, it improves focus, removes noise, and balances lighting. Medical systems use it to clarify X-rays or MRI scans. In manufacturing, it checks product surfaces for flaws, identifying irregular edges or patterns that may signal defects.

In cars and drones, real-time edge detection and motion analysis help machines react to the environment. For satellites and aerial imaging, it enhances clarity and reduces atmospheric distortions. Even in augmented reality, low-level vision helps align digital objects with the real world by detecting surfaces and depth accurately.

However, challenges remain. Variability in lighting, complex backgrounds, or weak contrast can make it harder to extract edges or features. Surfaces that lack texture or have repetitive patterns confuse feature matchers. Extreme conditions, such as fog, glare, or fast motion, still test the limits of current techniques.

Research continues into improving adaptability. Some new methods use hybrid approaches, combining learned filters with traditional processing, to adjust to changing conditions. Event-based cameras, which report per-pixel brightness changes as they happen rather than capturing full frames, are pushing a new kind of low-level vision that’s faster and more efficient.
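The event-camera idea can be approximated in software to get a feel for the data it produces. The sketch below compares two ordinary frames and emits an event wherever the log intensity changed by more than a threshold, with a polarity of +1 or -1. This is only a frame-based simulation under assumed parameters (the function name `events_between` and the threshold of 0.2 are made up for the example); real sensors fire asynchronously per pixel and attach timestamps.

```python
import math

def events_between(prev, curr, threshold=0.2):
    """Simulate events as (x, y, polarity) where log intensity changed enough."""
    events = []
    for y, (row_p, row_c) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_p, row_c)):
            # +1.0 avoids log(0) for black pixels.
            delta = math.log(c + 1.0) - math.log(p + 1.0)
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
    return events

prev = [[100, 100, 100], [100, 100, 100]]
curr = [[100, 180, 100], [100, 100, 40]]
events = events_between(prev, curr)
```

Only the two pixels that changed produce output, one brightening and one darkening. The static background generates nothing, which is where the efficiency of this style of sensing comes from.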

These directions suggest that while the nature of low-level vision may evolve, its role will remain central. Clean input, reliable structure, and efficient pre-processing are still core needs in any visual system, especially when dealing with real-world challenges, limited hardware, variable environments, and the growing demand for accuracy and speed.

Low-level vision lays the foundation for how machines see. It doesn't interpret, label, or guess; it prepares. Finding edges, cleaning up noise, and estimating depth gives higher-level systems something solid to work with. Even in an age of deep learning, this stage is still part of the process. It brings speed, stability, and clarity, especially when resources are limited or environments are unpredictable. Whether in a phone, drone, robot, diagnostic tool, or any other real-time embedded system, it continues to serve an essential purpose: making sure the system sees clearly before it tries to understand anything at all.

Advertisement

How an AI avatar generator creates emotionally aware avatars that respond to human feelings with empathy, transforming virtual communication into a more natural and meaningful experience

Explore how AI is transforming drug discovery by speeding up development and improving treatment success rates.

Learn how AI is being used by Microsoft Dynamics 365 Copilot to improve customer service, increase productivity, and revolutionize manufacturing support.

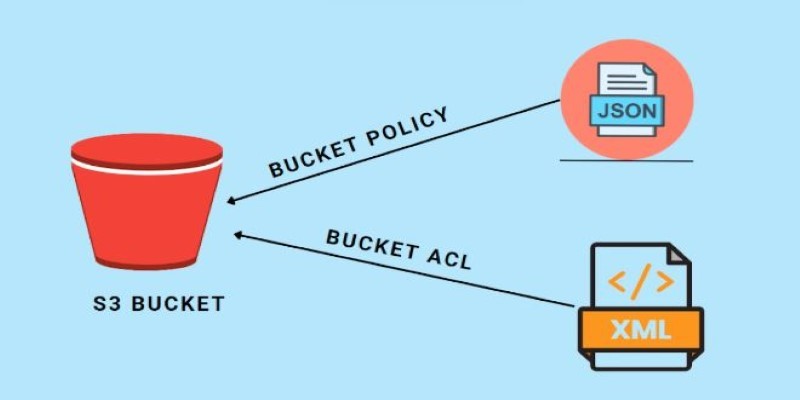

How AWS S3 buckets and security work to keep your cloud data protected. This guide covers storage, permissions, encryption, and monitoring in simple terms

Generate millions of transects in seconds with Polars and GeoPandas, enabling fast, scalable geospatial analysis for planning

Discover how AI in weather prediction boosts planning, safety, and decision-making across energy, farming, and disaster response

Learn the top 5 strategies to implement AI at scale in 2025 and drive real business growth with more innovative technology.

Learn how AI innovations in the Microsoft Cloud are transforming manufacturing processes, quality, and productivity.

Learn to boost PyTorch with custom kernels, exploring speed gains, risks, and balanced optimization for lasting performance

How NVIDIA’s Neuralangelo is redefining 3D video reconstruction by converting ordinary 2D videos into detailed, interactive 3D models using advanced AI

How interacting with remote databases works when using PostgreSQL and DBAPIs. Understand connection setup, query handling, security, and performance best practices for a smooth experience

AI is reshaping the education sector by creating personalized learning paths, automating assessments, improving administration, and supporting students through virtual tutors. Discover how AI is redefining modern classrooms and making education more inclusive, efficient, and data-driven