
OpenAI has long been at the center of AI conversations, mostly for its work on language models like GPT-3 and GPT-4. But something new is taking shape—something beyond text, screens, and conversations. The company is quietly shifting attention to robotics. This isn't about experiments or side projects; it's starting to look like a deliberate new phase.

With job openings, research efforts, and partnerships in motion, OpenAI may be preparing to bring its AI from the digital world into the physical one. The signs are growing clearer—and the implications could be significant.

The clearest signals of OpenAI’s robotics push are its new job listings. Positions like “robotics software engineer” and “embodied AI researcher” have been posted with mentions of working on real-world robotic arms and full humanoid systems. Some of these listings even reference collaboration with Figure AI, a startup developing humanoid robots aimed at general-purpose tasks. These aren’t just research roles—they suggest OpenAI is working toward practical deployment.

This renewed focus comes after a quiet period. In 2019, OpenAI impressed the AI world by teaching a robotic hand to solve a Rubik’s Cube using reinforcement learning. But the company later shut that robotics team down in 2021 to focus on language and multimodal systems. Now, with stronger models and more data, OpenAI seems ready to revisit robotics—with better tools in hand.

GPT-4 and its successors change the equation. These models are far better than their predecessors at interpreting language and images, and even at multistep planning. Combined with large-scale compute, this means OpenAI may no longer need to train robotic control policies from scratch. Instead, it could build on its existing models to manage physical tasks, potentially using the same foundation model across different types of robots.

There's a good reason OpenAI is turning toward robotics now. The broader industry is pushing toward general-purpose robots, not just factory arms or cleaning bots. Companies like Figure, Agility Robotics, and 1X are building bipedal or multipurpose robots for real-world environments. They want machines that can be directed in natural language and adapt to unfamiliar tasks, and following natural-language instructions is exactly what OpenAI's large models already do well.

OpenAI is also directly involved. It has invested in 1X Technologies through the OpenAI Startup Fund and is reportedly working closely with Figure AI. These aren't surface-level partnerships; they point to a strategic interest in becoming the intelligence layer for the physical world. Instead of building robots from scratch, OpenAI could focus on being the brain behind them.

The growing availability of compute is another factor. OpenAI now has the infrastructure to handle massive multimodal training that combines video, images, text, and sensor data. Robotics demands this kind of scale: real-world environments are messy and unpredictable, and systems must process high-volume inputs and respond in real time.

OpenAI has also pulled in talent with deep experience in robotics and embodied systems. New teams have reportedly formed around "embodied AI" initiatives, and several engineers with experience in robot manipulation and control have joined the company. These hires reflect a serious investment, not an experiment.

OpenAI’s robotics effort likely revolves around connecting its foundation models to robotic control systems. This means bridging GPT-like models with physical sensors, cameras, and actuators. Unlike text, which can be processed with some delay, physical systems demand speed and accuracy—robots must make decisions on the fly.

To handle that, OpenAI might use a hybrid setup. Foundation models could be responsible for high-level planning and interpreting instructions, while lower-level control modules handle the timing-sensitive actions. This layered architecture is common in robotics, where the "brain" delegates execution to faster, more responsive subsystems.
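To make the split concrete, here is a minimal sketch of such a two-layer loop, assuming a one-dimensional "joint" and a toy task. The names `plan`, `p_control`, `Subgoal`, and `run` are hypothetical and do not come from OpenAI; the `plan` function is a lookup table standing in for a slow foundation-model call, and `p_control` is a simple proportional controller standing in for the fast low-level loop.

```python
from dataclasses import dataclass


@dataclass
class Subgoal:
    name: str
    target: float  # desired 1-D joint position, arbitrary units (toy assumption)


def plan(instruction: str) -> list[Subgoal]:
    """Hypothetical high-level planner. In a real system this slow step might
    query a foundation model; here a fixed lookup table stands in for it."""
    plans = {
        "pick up the cup": [
            Subgoal("reach", 0.8),
            Subgoal("grasp", 0.8),
            Subgoal("lift", 0.3),
        ],
    }
    return plans.get(instruction.lower(), [])


def p_control(position: float, target: float, gain: float = 0.5) -> float:
    """Low-level proportional controller: one fast, cheap update step
    that moves the position a fraction of the way toward the target."""
    return position + gain * (target - position)


def run(instruction: str, tol: float = 1e-3, max_steps: int = 100) -> list[str]:
    position = 0.0
    log = []
    # The planner is called once per instruction (slow, deliberative layer).
    for subgoal in plan(instruction):
        steps = 0
        # The controller runs many times per subgoal (fast, reactive layer).
        while abs(subgoal.target - position) > tol and steps < max_steps:
            position = p_control(position, subgoal.target)
            steps += 1
        log.append(f"{subgoal.name}: reached {position:.3f} in {steps} steps")
    return log


if __name__ == "__main__":
    for line in run("Pick up the cup"):
        print(line)
```

The design point is the difference in call frequency: the expensive planner is invoked once, while the cheap controller iterates until each subgoal is met. A real robot would replace the toy controller with tuned, hardware-specific control running at a fixed rate.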

The company may not build its own robots at all. Instead, it could focus on making its models compatible with robots developed by other firms. This would allow OpenAI to stay focused on AI while letting partners specialize in mechanical design. It’s a strategy that mirrors how operating systems or cloud services work across various devices—OpenAI could provide the general-purpose interface between language and action.

Evidence suggests OpenAI is already training models using robotics data in both simulated and real-world settings. Some researchers have noted updates to internal environments designed to teach embodied agents new skills. These could help the models generalize before being deployed on actual hardware.

The big question is what happens if OpenAI succeeds. Most current robots are still locked into narrow tasks—loading boxes, cleaning floors, or moving parts on an assembly line. But a robot powered by a general AI model could adapt on the fly. It could take instructions like “clean up this mess” or “help me with groceries” and figure out what to do, even in a new environment.

This flexibility is what many in the industry are aiming for. Using large language and vision models to guide robots could make them more intuitive and capable. AI in robotics may finally break out of the lab and into everyday settings—from healthcare and logistics to offices and homes.

But progress brings new challenges. Safety grows harder when AI acts in the real world. If a robot misinterprets a command or takes an unintended action, who's responsible? These questions aren't new, but they gain urgency as robots become more autonomous and less predictable. OpenAI, which already works on safety and alignment for its language models, will need to extend that work into robotics.

Still, OpenAI's robotics work appears to be about more than solving specific problems. It points toward a broader idea: intelligent systems should not just speak and understand, but also see, move, and interact. The company seems to be building toward that future.

OpenAI's move into robotics marks a shift from processing language to interacting with the physical world. With advanced models, strong infrastructure, and growing partnerships, it's positioned to shape how AI functions beyond screens. While details are still emerging, the direction is clear: robotics is no longer a side project. If progress continues, OpenAI could help create general-purpose robots powered by the same intelligence behind its widely used language models.

Advertisement

Discover how an AI platform is transforming newborn eye screening by improving accuracy, reducing costs, and saving live

OpenAI robotics is no longer speculation. From new hires to industry partnerships, OpenAI is preparing to bring its AI into the physical world. Here's what that could mean

Learn to boost PyTorch with custom kernels, exploring speed gains, risks, and balanced optimization for lasting performance

Discover why technical management continues to play a critical role in today's AI-powered workspaces. Learn how human leadership complements artificial intelligence in modern development teams

Can $600 million change the self-driving game? This AI freight company isn’t chasing hype—it’s delivering real-world results. Here's why the industry is paying close attention

LangFlow is a user-friendly interface built on LangChain that lets you create language model applications visually. Reduce development time and test ideas easily with drag-and-drop workflows

How an AI avatar generator creates emotionally aware avatars that respond to human feelings with empathy, transforming virtual communication into a more natural and meaningful experience

How NVIDIA’s Neuralangelo is redefining 3D video reconstruction by converting ordinary 2D videos into detailed, interactive 3D models using advanced AI

Compare Power BI vs Tableau in 2025 to find out which BI tool suits your business better. Explore ease of use, pricing, performance, and visual features in this detailed guide

Meta is restructuring its AI division again. Explore what this major shift in the Meta AI division means for its future AI strategy and product goals

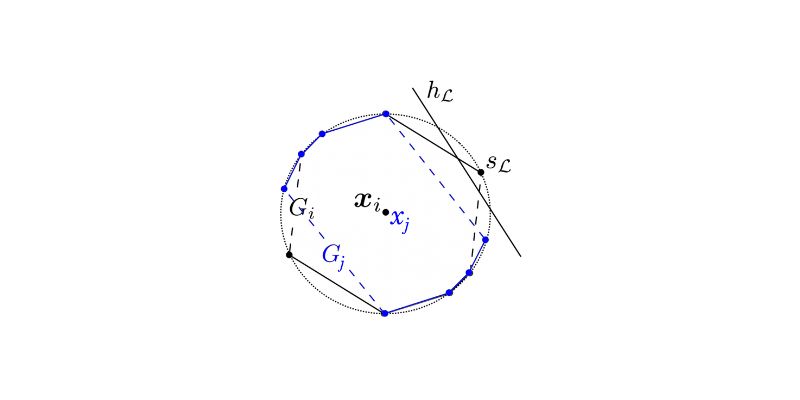

Explore statistical learnability of strategic linear classifiers with simple walkthroughs and practical AI learning concepts

Learn the top 5 strategies to implement AI at scale in 2025 and drive real business growth with more innovative technology.